How we can harness machine learning and artificial intelligence to address violence against women

Now more than ever, technology is a deeply ingrained part of our lives. We harness the power of artificial intelligence (AI) and machine learning throughout our day, from picking tonight’s movie on Netflix to shopping for the perfect water bottle on Amazon. But did you know that technology, specifically technology that relies on AI, is helping end domestic violence on multiple fronts?

Today we’ll be taking a closer look at two ways AI has been used recently to support survivors of domestic violence and how we can continue to harness the power of technology to eliminate violence against women.

Coordinating Care

One recent effort to use AI to support women experiencing violence involves chatbots that can rapidly connect women to support if they are attempting to flee an abuser. With between 50% and 75% of femicides related to domestic violence occurring when a woman leaves an abuser, these chatbots can quickly and efficiently connect women to help before it is too late.

When a woman decides she is ready to leave an abuser, she needs a quick and clear list of the care options available to her. Moreover, placing a voice call or searching for resources on a shared computer can place her at greater risk of harm.

This is where AI chatbots come in. With the correct training, the chatbot can quickly appraise a woman’s situation by asking questions about potential risk factors, like an offender with a gun.

Once the chatbot is aware of potential risk factors, it can then offer a woman a list of options for care quickly and concisely. These options may include emergency resources such as phone numbers of transition houses or access to less urgent services like facilities that offer free storage for women fleeing violence.
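To make this flow concrete, here is a minimal Python sketch of how a chatbot might turn answers to risk questions into an ordered list of options. Everything in it is a hypothetical placeholder: the questions, the resource names, and the simple "urgent options first" rule are assumptions for illustration, not a description of any real chatbot or a validated risk assessment.

```python
from dataclasses import dataclass

# Hypothetical screening questions; a real tool would use questions
# chosen and validated by trained counselors.
RISK_QUESTIONS = {
    "abuser_has_gun": "Does the person hurting you have access to a gun?",
    "planning_to_leave": "Are you planning to leave soon?",
}


@dataclass(frozen=True)
class Resource:
    name: str
    urgent: bool


# Placeholder resources for illustration only.
RESOURCES = [
    Resource("Free storage for belongings", urgent=False),
    Resource("Transition house phone line", urgent=True),
    Resource("Counseling appointment", urgent=False),
]


def suggest_options(answers: dict[str, bool]) -> list[str]:
    """Return resource names, with urgent ones first if any risk factor is present."""
    high_risk = any(answers.get(key, False) for key in RISK_QUESTIONS)
    if high_risk:
        # sorted() is stable, so non-urgent resources keep their original order.
        ordered = sorted(RESOURCES, key=lambda r: not r.urgent)
    else:
        ordered = list(RESOURCES)
    return [r.name for r in ordered]
```

Calling `suggest_options({"abuser_has_gun": True})` would put the transition house phone line at the top of the list. In practice, the questions and the ordering logic would need to be designed with counselors and survivors rather than hard-coded like this.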

The importance of the human touch

This is not to say that care for survivors of violence against women should be completely automated. Counselors play an essential role in the healing process for many survivors, and their expertise can never be replicated by a chatbot.

One area where AI fails is applying common sense or thinking in the abstract. AI can often process data faster and more efficiently than the human brain, but when it comes to making complex decisions, these systems begin to fall apart. It is imperative, then, that human intelligence and AI are seen as complements, not substitutes.

Chatbots should instead be used to bridge the gap and connect survivors to trained professionals. These chatbots are one of the many tools we can use to support survivors of violence against women, and if they save at least one woman’s life, then they’re worth pursuing.

Underestimating the threat

Calling law enforcement after experiencing abuse is a highly personal choice. There are many reasons women choose to (or choose not to) call law enforcement officials and statutory authorities.

One reason for not calling law enforcement that we often hear from our clients is that officials underestimate the severity of the violence survivors are experiencing. Especially in cases of emotional and financial abuse, bodies of authority frequently fail to understand the gravity of abuse until it is too late.

And if law enforcement officials don’t understand the severity of the abuse a woman is experiencing, they are unlikely to respond in an effective way. This leaves survivors at a continued risk of violence, or even femicide.

Underestimating the violence experienced by women is emblematic of the inherent misogyny in our society. But it also reflects an inability on the part of law enforcement officials to effectively synthesize information provided by survivors.

A better response

An estimated 11.8% of women who call law enforcement regarding domestic violence will call again within a year after experiencing further violence. To protect women, it is critical that law enforcement agents find a way to predict repeated attacks with greater accuracy.

Enter machine learning. Recent research conducted at the London School of Economics (LSE) has found that machine learning approaches can predict which women are most likely to experience repeated violence with significantly greater accuracy than standard questionnaires.

These approaches rely on technology to quickly synthesize existing facts surrounding a case, such as an abuser’s criminal record, other calls made to police, and aspects of abuse like access to a firearm. When officers arrive on scene, they are equipped with more comprehensive information regarding a case, which means they can be of greater assistance to survivors.
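As a rough illustration of what "synthesizing existing facts" can mean in practice, the sketch below gathers the kinds of facts mentioned above into a single numeric record that a machine learning model could take as input. The field names and the encoding are assumptions made for this example; they are not the variables used in the LSE research.

```python
from dataclasses import dataclass


@dataclass
class CaseRecord:
    # Hypothetical fields based on the examples in the text.
    prior_convictions: int    # from the abuser's criminal record
    prior_police_calls: int   # other calls made to police
    firearm_access: bool      # whether the abuser has access to a firearm


def to_features(case: CaseRecord) -> list[float]:
    """Encode a case as a list of numbers a model could learn from."""
    return [
        float(case.prior_convictions),
        float(case.prior_police_calls),
        1.0 if case.firearm_access else 0.0,
    ]


example = CaseRecord(prior_convictions=2, prior_police_calls=3, firearm_access=True)
features = to_features(example)
```

A model would then be trained on many such records alongside known outcomes, so that it can estimate the risk of repeat violence for a new case.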

By the numbers

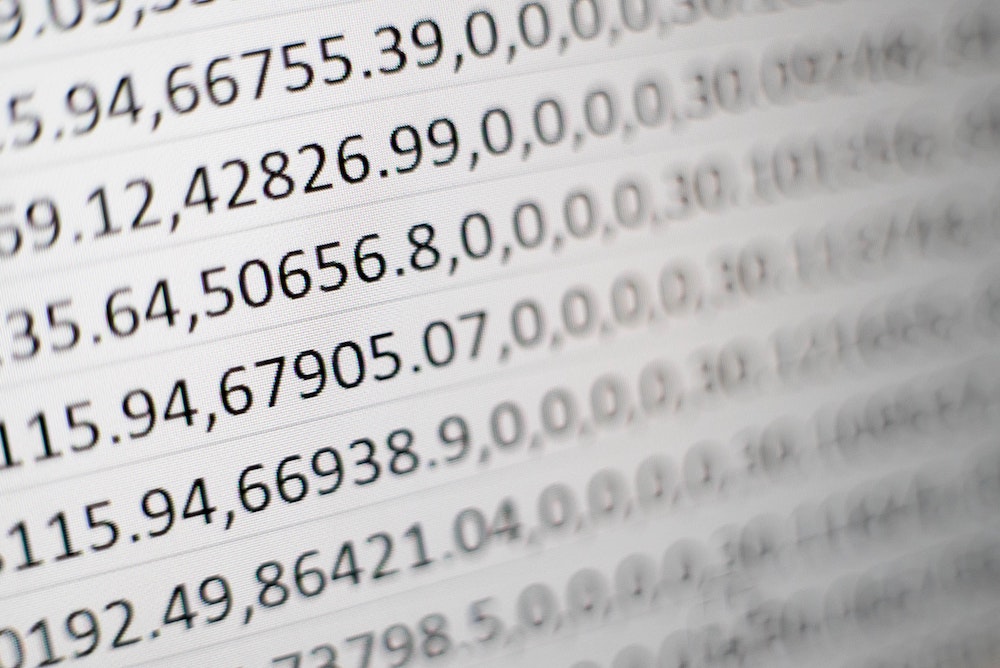

The LSE research team looked at 16,203 cases of violence against women in which women chose to call the police. Of these, 1,702 cases were falsely classified as “low risk situations” by traditional questionnaires.

These 1,702 women experienced repeated instances of violence even though traditional questionnaires predicted they wouldn’t. This gives traditional questionnaires a negative prediction rate of 11.5%, meaning that roughly one in nine “low risk” predictions turned out to be wrong.

When law enforcement agencies made the switch to machine learning approaches, the results were significantly better than before. Although the model still misclassified 468 situations as low risk, this switch dropped the negative prediction rate to only 6.1%.
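The arithmetic behind these percentages can be sketched in a few lines of Python. One assumption is needed: the rate appears to be calculated over the cases each method labeled low risk, not over all 16,203 cases (1,702 out of 16,203 would be about 10.5%, not 11.5%). The low-risk totals below are back-calculated from the figures in this article under that assumption; they are not numbers reported here.

```python
def negative_prediction_rate(missed_cases: int, predicted_low_risk: int) -> float:
    """Share of low-risk predictions where repeat violence still occurred."""
    return missed_cases / predicted_low_risk


def implied_low_risk_total(missed_cases: int, rate: float) -> int:
    """Back out how many low-risk predictions a reported rate implies."""
    return round(missed_cases / rate)


# Figures from the article: missed cases and reported rates.
questionnaire_total = implied_low_risk_total(1702, 0.115)
ml_total = implied_low_risk_total(468, 0.061)

# Sanity check: plugging the implied total back in recovers the reported rate.
questionnaire_rate = negative_prediction_rate(1702, questionnaire_total)
```

Under this reading, the machine learning model both labeled fewer cases as low risk and was wrong about a smaller share of them.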

Every percentage-point drop in the negative prediction rate represents women who can now receive the support they need from law enforcement. Thanks to machine learning, law enforcement can now more effectively fight to end violence against women.

Women and technology

We’ve seen that machine learning and artificial intelligence are highly effective tools, both for connecting survivors to support resources and for giving law enforcement better ways to conduct risk assessments. But with great power comes great responsibility.

Artificial intelligence operates on and learns from the data it is provided… by humans. And with humans comes human bias.

It is especially important to note that in some countries, like Germany, women make up only 16% of the AI talent pool. With such a disproportionate share of men writing algorithms and choosing which data to provide, it’s inevitable that this technology will be biased against women in some ways.

For example, some medical apps rely on AI to predict, based on existing data, whether a user is having a heart attack. Because it is mostly men who teach the app what symptoms to associate with a heart attack, they may forget to include symptoms that affect women more, such as a sore arm or jaw.

If more women were involved in the process of creating this algorithm, the app could be of greater service to women by incorporating these gendered symptoms. But without diversity in the AI talent pool, women will continue to be marginalized and even harmed by machine learning technology.

If we truly want to harness the power of AI to help us end violence against women, it is imperative that the experiences of survivors and advocates are reflected in the algorithms backing this technology.

Towards a better future

At Dixon, we’re always excited to learn about new ways to serve women and their children fleeing violence.

If you’re a supporter in the tech industry who shares our goal of eliminating barriers to create a world free of violence against women, we’d love to chat about how we can work together!